HKBU Physicist Reveals How the Brain’s Low-Precision Top-Down Generation Strategy Enhances Visual Perception and Imagination, and Advances Sketch-Generation AI

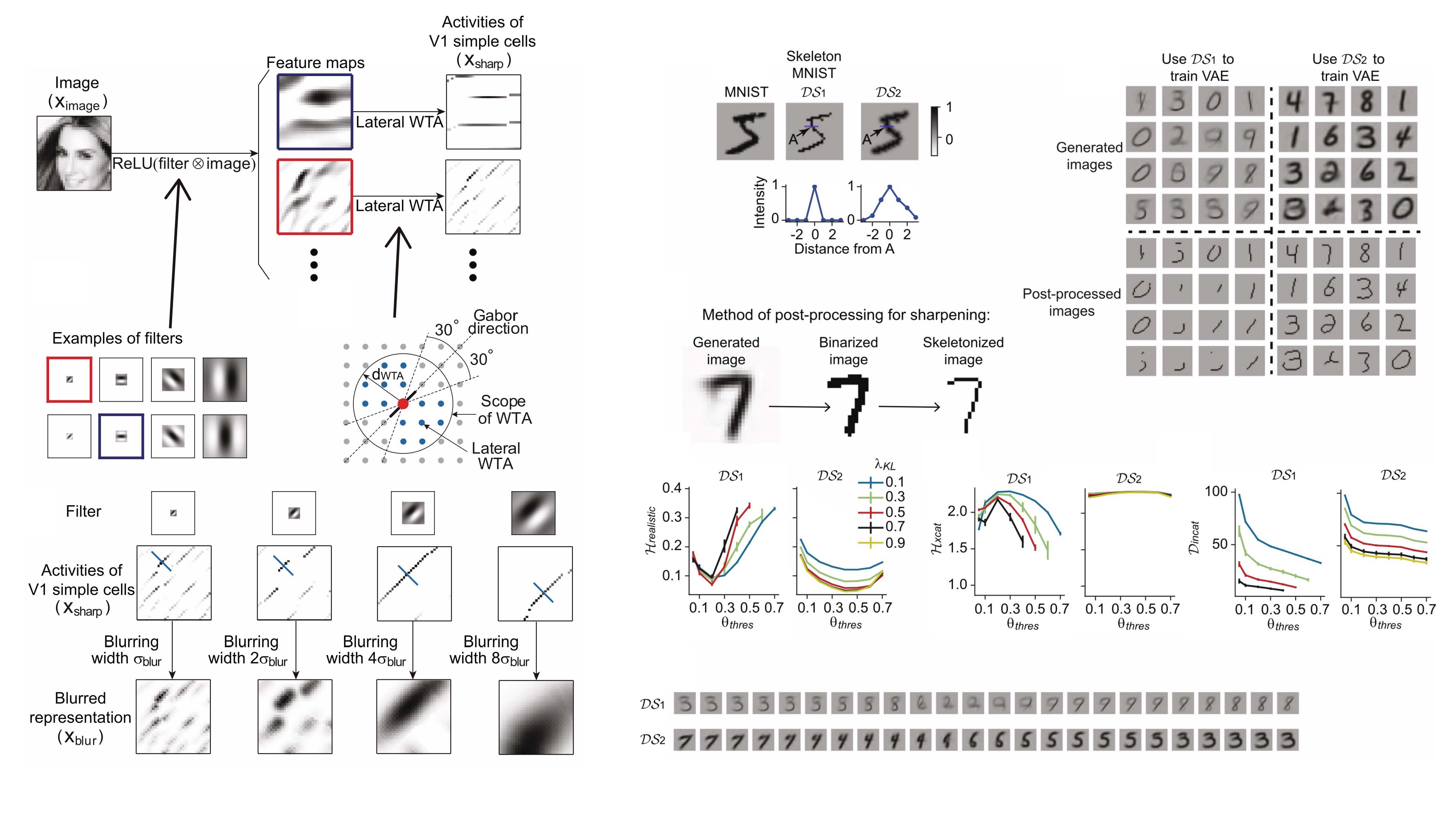

The computational model of the target representations, and sketch-generation results on the skeleton MNIST dataset.

Both perception and imagination rely on top-down signals sent from high-level cortex to the primary visual cortex (V1), which reconstruct or simulate the representations that seen images evoke bottom-up. Interestingly, top-down signals in V1 have lower coding precision than the bottom-up representations. Why the brain uses low-precision signals to reconstruct or simulate high-precision representations has remained unclear.

To address this question, Dr. Liang Tian, Assistant Professor in the Department of Physics at HKBU, and his PhD student, Mr. Haoran Li, collaborated with Dr. Zedong Bi, Assistant Professor at Lingang Laboratory in Shanghai. Together, they developed a framework to model the top-down pathway of the visual system. Their research revealed that low-precision top-down signals reconstruct or simulate the information contained in the sparse activity of V1 simple cells more effectively, thereby facilitating perception and imagination. They also found that this advantage of low-precision generation is linked to how it helps high-level cortex form geometry-respecting representations.

Building on these findings, they also proposed a novel AI technique that significantly improves the quality and diversity of generated sketches, a style of drawing made up of thin lines.

The research findings were published in Neural Networks (https://www.sciencedirect.com/science/article/pii/S0893608023007372) (Impact Factor: 9.66) under the title “Top-down generation of low-resolution representations improves visual perception and imagination”.