The Nobel Prize and the Fusion of Physics and AI: A New Era of Transdisciplinary Innovation

Physics: Foundation for Machine Learning and AI

On October 8, 2024, the Royal Swedish Academy of Sciences announced that the 2024 Nobel Prize in Physics would be awarded to John Hopfield and Geoffrey Hinton "for foundational discoveries and inventions that enable machine learning with artificial neural networks." This recognition underscores the deep connection between physics and machine learning. Hopfield and Hinton applied key concepts from statistical physics to the design and training of neural networks, showing how physical ideas such as energy landscapes, probabilistic modeling, entropy and information, and optimization have shaped artificial intelligence. Their discoveries have advanced both fields and highlighted the potential of transdisciplinary approaches to complex systems.

John J. Hopfield (left) and Geoffrey E. Hinton (right): © Nobel Prize Outreach

Physics, at its core, studies the laws governing matter, energy, and information, through both real-world phenomena and the abstract models that describe and predict complex behavior. Its methods apply as readily to an abstract problem, such as optimizing a neural network, as to a concrete one, such as the motion of a falling object. The physicist’s problem-solving approach, grounded in systematic reasoning, modeling, and a deep understanding of fundamental principles, carries well beyond the discipline’s traditional domains. More fundamentally, both physics and machine learning study complex systems with many interacting elements, and both use shared models to explore emergent behavior and universality.

John Hopfield and Geoffrey Hinton's Contributions

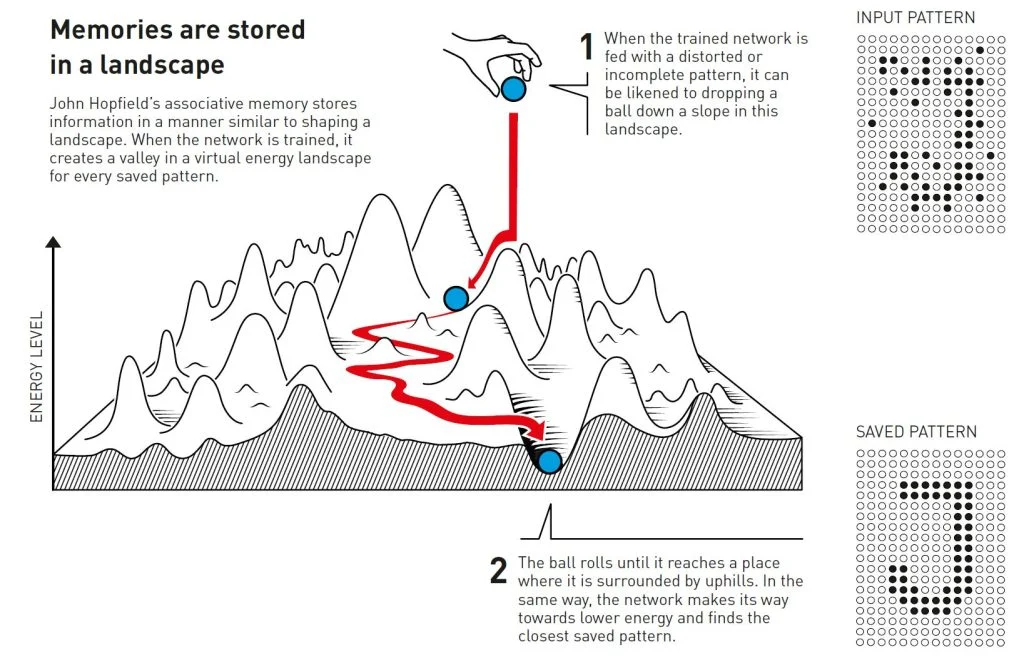

John Hopfield's seminal work in the early 1980s introduced the Hopfield network, a recurrent neural network whose behavior can be analyzed as motion on an energy landscape [1]. The network stores patterns as stable states (local minima of its energy function), and that energy function is formally analogous to the energy of a magnetic spin system, which ties associative memory directly to statistical physics. Hopfield showed that, starting from a partial or corrupted input, the network's dynamics carry it steadily downhill in energy until it settles into a stored pattern, much as a physical system relaxes toward a state of minimum energy; he later extended this result to neurons with graded (continuous) responses [2]. His work laid the theoretical foundation for associative memory, pattern recognition, and neural network processes, offering valuable tools for computational neuroscience and the study of brain function.
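The energy-descent picture can be made concrete in a few lines. The following is a minimal sketch, not Hopfield's original formulation: it assumes ±1 units (a common textbook variant; [1] used 0/1 units), the Hebbian storage rule W_ij = (1/N) Σ_μ ξ_i^μ ξ_j^μ with W_ii = 0, no threshold terms, and asynchronous updates in random order. The function names `train_hebbian`, `energy`, and `recall` are illustrative. Because each update sets a unit to the sign of its local field h_i = Σ_j W_ij s_j, the energy changes by ΔE = -(s_i_new - s_i_old) h_i ≤ 0, so the recorded energy can only fall or stay flat.

```python
import random

def train_hebbian(patterns):
    """Hebbian storage: W_ij = (1/N) * sum over patterns of x_i * x_j, with W_ii = 0."""
    n = len(patterns[0])
    w = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    w[i][j] += p[i] * p[j] / n
    return w

def energy(w, s):
    """E(s) = -1/2 * sum_ij W_ij s_i s_j (no threshold terms in this sketch)."""
    n = len(s)
    return -0.5 * sum(w[i][j] * s[i] * s[j] for i in range(n) for j in range(n))

def recall(w, probe, max_sweeps=10, seed=0):
    """Asynchronous dynamics: update one unit at a time to the sign of its local field.

    With symmetric W and zero diagonal, each update changes the energy by
    -(s_new - s_old) * h_i <= 0, so the recorded energies never increase.
    Stops once a full sweep changes nothing.
    """
    rng = random.Random(seed)
    s = list(probe)
    n = len(s)
    energies = [energy(w, s)]
    for _ in range(max_sweeps):
        order = list(range(n))
        rng.shuffle(order)
        changed = False
        for i in order:
            h = sum(w[i][j] * s[j] for j in range(n))  # local field on unit i
            new = 1 if h >= 0 else -1
            if new != s[i]:
                s[i] = new
                changed = True
        energies.append(energy(w, s))
        if not changed:
            break
    return s, energies

# Store two orthogonal 8-unit patterns, then recall from a corrupted copy of p1.
p1 = [1, 1, 1, 1, -1, -1, -1, -1]
p2 = [1, -1, 1, -1, 1, -1, 1, -1]
w = train_hebbian([p1, p2])
probe = [-p1[0]] + p1[1:]  # p1 with its first unit flipped
state, energies = recall(w, probe)
print(state == p1, energies)  # True [-1.5, -3.0, -3.0]
```

Because p1 and p2 are orthogonal, the field from p1 dominates and pulls the flipped unit back, so the network settles into p1. Storing many correlated patterns in a small network can instead create spurious minima, which this toy example does not explore.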

Geoffrey Hinton, with David Ackley and Terrence Sejnowski, extended these ideas in the Boltzmann machine [3]. Its units switch on and off stochastically, so that the network samples states according to the Boltzmann distribution, much like a physical system in thermal equilibrium; learning adjusts the connection weights so that the patterns in the training data become low-energy, high-probability states. Hinton also helped popularize the backpropagation algorithm [4], which, together with later methods for training many-layered networks [5], enabled deep neural networks to learn effectively from large datasets and made deep learning a dominant tool in AI. This work paved the way for breakthroughs in computer vision [6], natural language processing, and many other applications, where deep networks extract increasingly abstract features from data to solve real-world problems with unprecedented accuracy.
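The sampling and learning ideas behind the Boltzmann machine [3] can likewise be sketched on a toy network. This is a minimal illustration under stated assumptions, not the algorithm as published: it uses 0/1 units with symmetric weights and biases, keeps the network tiny (three units) so that the partition function Z can be computed by brute-force enumeration, and omits hidden units. The stochastic update turns unit k on with probability 1/(1 + e^(-ΔE_k/T)), where ΔE_k is the energy gap between its off and on states. For training, the rule Δw_ij ∝ ⟨s_i s_j⟩_data - ⟨s_i s_j⟩_model is applied with exact model averages in place of the sampled "free-running" statistics of [3]. All function names are hypothetical.

```python
import itertools
import math
import random

def energy(w, b, s):
    """E(s) = -sum_{i<j} w_ij s_i s_j - sum_i b_i s_i for units s_i in {0, 1}; w is symmetric."""
    n = len(s)
    pairs = sum(w[i][j] * s[i] * s[j] for i in range(n) for j in range(i + 1, n))
    return -pairs - sum(b[i] * s[i] for i in range(n))

def boltzmann_probs(w, b, T=1.0):
    """Exact p(s) = exp(-E(s)/T) / Z by enumerating all 2^n states (feasible only for tiny n)."""
    states = list(itertools.product((0, 1), repeat=len(b)))
    weights = [math.exp(-energy(w, b, s) / T) for s in states]
    z = sum(weights)
    return {s: x / z for s, x in zip(states, weights)}

def gibbs_sample(w, b, n_sweeps, T=1.0, seed=0):
    """Stochastic updates: unit k turns on with probability 1 / (1 + exp(-gap_k / T)),
    where gap_k = E(s_k = 0) - E(s_k = 1) = sum_j w_kj s_j + b_k.
    Returns the fraction of sweeps that ended in each state."""
    rng = random.Random(seed)
    n = len(b)
    s = [rng.randint(0, 1) for _ in range(n)]
    counts = {}
    for _ in range(n_sweeps):
        for k in range(n):
            gap = sum(w[k][j] * s[j] for j in range(n) if j != k) + b[k]
            s[k] = 1 if rng.random() < 1.0 / (1.0 + math.exp(-gap / T)) else 0
        counts[tuple(s)] = counts.get(tuple(s), 0) + 1
    return {state: c / n_sweeps for state, c in counts.items()}

def train_fully_visible(data, n, epochs=500, lr=0.5):
    """Boltzmann learning rule, special case with no hidden units:
    dw_ij = lr * (<s_i s_j>_data - <s_i s_j>_model), and likewise for biases.
    Model averages are computed exactly by enumeration instead of by sampling."""
    w = [[0.0] * n for _ in range(n)]
    b = [0.0] * n
    m = len(data)
    for _ in range(epochs):
        p = boltzmann_probs(w, b)  # fixed for the whole step: a full-gradient update
        for i in range(n):
            data_i = sum(s[i] for s in data) / m
            model_i = sum(q * s[i] for s, q in p.items())
            b[i] += lr * (data_i - model_i)
            for j in range(i + 1, n):
                data_ij = sum(s[i] * s[j] for s in data) / m
                model_ij = sum(q * s[i] * s[j] for s, q in p.items())
                w[i][j] += lr * (data_ij - model_ij)
                w[j][i] = w[i][j]  # keep the weight matrix symmetric
    return w, b

# 1) Sampling: Gibbs frequencies should approach the exact Boltzmann distribution.
w = [[0.0, 1.0, -1.0], [1.0, 0.0, 0.5], [-1.0, 0.5, 0.0]]
b = [0.2, -0.3, 0.1]
exact = boltzmann_probs(w, b)
sampled = gibbs_sample(w, b, n_sweeps=50000)
max_gap = max(abs(exact[s] - sampled.get(s, 0.0)) for s in exact)
print(f"largest gap between exact and sampled probabilities: {max_gap:.3f}")

# 2) Learning: fit a toy dataset in which units 0 and 1 always agree.
data = [(1, 1, 0), (1, 1, 0), (1, 1, 1), (0, 0, 1), (0, 0, 0)]
w_fit, b_fit = train_fully_visible(data, n=3)
p_fit = boltzmann_probs(w_fit, b_fit)
print(f"probability mass on training patterns: {sum(p_fit[s] for s in set(data)):.2f}")
```

The first part checks that repeated stochastic updates reproduce the Boltzmann distribution; the second shows learning shifting probability mass onto the training patterns. With hidden units, the data-side averages would themselves require sampling with the visible units clamped, which is what makes general Boltzmann machine training expensive.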

Physics and AI Research in the Physics Department Promotes the University’s Transdisciplinary Innovation

Today, more physicists are engaging in machine learning research, applying their expertise in statistical mechanics, optimization, and complex-system modeling to advance AI, while also using AI to tackle problems in physics. This two-way exchange, often described as "Physics of AI" and "AI for Physics," creates new opportunities for both fields, driving innovation and expanding the scope of scientific inquiry.

The Nobel Prize in Physics awarded to Hopfield and Hinton underscores the transformative power of merging physics and machine learning. Combining the two has produced a robust framework for addressing scientific challenges and has led to significant advances in both AI and the physical sciences, highlighting the impact of collaborative efforts on complex problems. The same synergy is central to our department’s research, where the fusion of physics, data science, and complex-systems analysis and modeling drives innovation across a wide range of fields, from AI to biological systems. Our work shows how physics principles can advance machine learning while deepening our understanding of complex biological processes.

For instance, Prof. Zhou Changsong’s research on neural dynamics, information processing, and brain cognition and intelligence provides key insights for brain-inspired AI. His work on hierarchical brain organization, self-organized criticality, and neuronal firing patterns informs the design of efficient, layered AI models that aim at more human-like intelligence. Dr. Tian Liang focuses on interdisciplinary research in AI for complex systems, integrating principles from statistical physics, network science, systems biology, and advanced AI. His research aims to uncover emergent properties and organizational principles, advancing our understanding of complex systems in both nature and technology. Dr. Tang Qianyuan’s research draws on millions of AI-predicted protein structures to explore the relationship between protein folding, dynamics, and evolution. He examines how thermal noise and mutations affect these structures, shedding light on shared challenges such as parameter sensitivity in biological systems and neural networks.

Across our department, machine learning methods are applied to a wide range of fields. Prof. Shi Jade’s research on cancer therapeutics uses machine learning to analyze cell imaging data, revealing key insights into signaling pathways and uncovering new drug targets. Similarly, Dr. Zeng Xiangze’s studies of biomolecular dynamics use machine learning to explore the kinetics and thermodynamics of proteins and nucleic acids, uncovering the molecular mechanisms that drive biomolecular function and helping to discover new drug molecules. By combining physics with machine learning, their work contributes to breakthroughs in health, disease treatment, and technology.

Through these interdisciplinary efforts, physics has extended its boundaries, driving innovation in areas once considered beyond its traditional scope. Our department is at the forefront of this expansion, integrating physics principles into fields such as AI, neuroscience, health, and technology. By applying the rigorous frameworks of physics to complex systems, we are not only advancing scientific understanding but also creating transformative solutions with wide-ranging implications. This ongoing collaboration continues to break new ground, showcasing the powerful synergy between physics and machine learning in addressing some of today’s most pressing challenges.

References

[1] Hopfield, J. J. (1982). Neural networks and physical systems with emergent collective computational abilities. Proceedings of the National Academy of Sciences, 79(8), 2554-2558.

[2] Hopfield, J. J. (1984). Neurons with graded response have collective computational properties like those of two-state neurons. Proceedings of the National Academy of Sciences, 81(10), 3088-3092.

[3] Ackley, D. H., Hinton, G. E., & Sejnowski, T. J. (1985). A learning algorithm for Boltzmann machines. Cognitive Science, 9(1), 147-169.

[4] Rumelhart, D. E., Hinton, G. E., & Williams, R. J. (1986). Learning representations by back-propagating errors. Nature, 323(6088), 533-536.

[5] Hinton, G. E., & Salakhutdinov, R. R. (2006). Reducing the dimensionality of data with neural networks. Science, 313(5786), 504-507.

[6] Krizhevsky, A., Sutskever, I., & Hinton, G. E. (2012). ImageNet classification with deep convolutional neural networks. Advances in Neural Information Processing Systems, 25, 1097-1105.

Source: https://www.nobelprize.org/prizes/physics/2024/press-release/